The Silent Killer: Understanding and Addressing AI Tech Debt in the SDLC

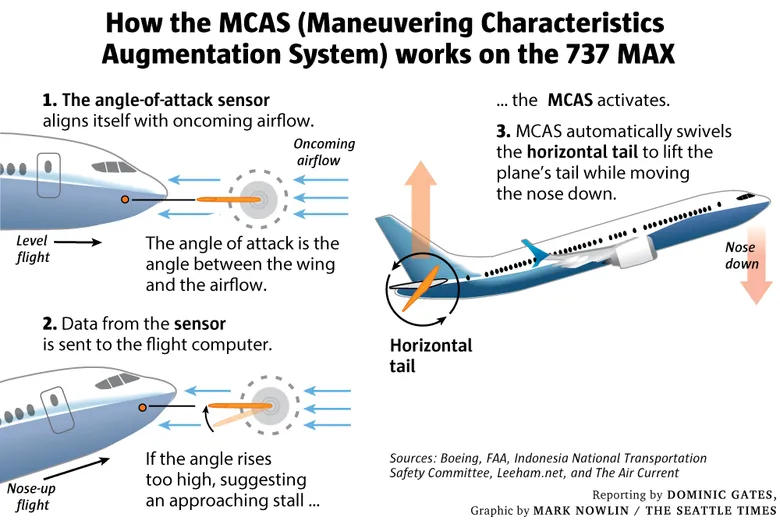

The $20 billion Boeing 737 MAX crisis is a case study every engineering and tech leader should examine to understand the true cost of automation and tech debt.

The tragic crashes of two Boeing 737 MAX aircraft (Lion Air Flight 610 and Ethiopian Airlines Flight 302) exposed critical problems in how automation was layered on without fully accounting for system complexity, pilot understanding, and technical debt.

At the heart of it was MCAS (Maneuvering Characteristics Augmentation System), an automated flight control system added to compensate for the new engine placement.

But instead of solving the problem, it created new ones, due to:

- Shadow debt: Rather than redesigning the airframe, Boeing took a shortcut and added MCAS as a patch.

- Invisible blockers: Pilots weren’t fully informed about its behavior, limiting their ability to act.

- Workflow bloat: Automation piled onto legacy systems, creating fragility and compounding debt.

- Automation fatigue: Pilots ended up fighting the system instead of being supported by it.

The lesson is timeless: debt in automation or design doesn’t grow linearly. Small issues interact, compound, and escalate in unpredictable ways.

Now, zoom out to software engineering in 2025. We’re seeing the same pattern repeat, this time with AI in the SDLC.

Teams adopt AI tools to move faster. But without careful governance, context, and observability, AI introduces its own version of shadow debt, invisible blockers, workflow bloat, and fatigue.

This is the new reality and challenge: AI Tech Debt.

What is AI Technical Debt in the SDLC?

Think of a CI/CD pipeline that once felt sleek and efficient.

Now you have replaced some of its tools with AI tools that handle code generation, auto-reviews, or release automation.

At first, it feels like superpowers. But over time, the cracks begin to show.

Models drift, reviews lack context, AI-generated code introduces hidden flaws, and debugging takes longer than writing from scratch.

What was meant to save time starts creating new bottlenecks and invisible risks. That’s AI technical debt.

In short, AI tech debt refers to the accumulation of inefficiencies, fragility, and oversight gaps resulting from the unchecked use of AI throughout the software delivery lifecycle.

Key Aspects of AI Tech Debt in the SDLC:

- Model Decay and Drift: AI models lose accuracy as data changes, but teams often skip retraining or monitoring, and this leads to unstable outputs.

- Black-Box Decisions: AI-generated code or reviews lack transparency. Developers can’t always explain or validate why a change was made.

- Over-Reliance on AI Outputs: Teams accept AI suggestions without human scrutiny, which builds hidden flaws directly into production systems.

- Unmaintained AI Integrations: Agents, scripts, or connectors tied to older APIs or models break over time, which requires constant firefighting.

- Lack of Observability: AI systems fail silently. Without robust monitoring, errors compound before anyone notices.

- Complexity Creep: Adding AI to every step of the SDLC without reconsidering workflows creates dependency-heavy pipelines.
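
One way to make drift and silent failure visible is to track a simple quality signal over time and alert when it degrades. The sketch below assumes a team records, per week, the share of AI suggestions that were merged without rework. The metric, the four-week window, and the 10-point threshold are illustrative assumptions, not a standard.

```python
from statistics import mean


def detect_drift(weekly_rates: list[float], window: int = 4, max_drop: float = 0.10) -> bool:
    """Flag drift when the recent average falls more than max_drop below the baseline.

    The baseline is the mean of the first `window` values; the recent average
    is the mean of the last `window` values.
    """
    if len(weekly_rates) < 2 * window:
        return False  # not enough history to compare two full windows
    baseline = mean(weekly_rates[:window])
    recent = mean(weekly_rates[-window:])
    return baseline - recent > max_drop


# Hypothetical acceptance rates (fraction of AI suggestions merged without rework).
rates = [0.72, 0.70, 0.71, 0.73, 0.66, 0.60, 0.57, 0.55]
if detect_drift(rates):
    print("Acceptance rate has dropped; review the model, prompts, or integration.")
```

A check like this does not explain why quality fell, but it turns a silent failure into a visible one.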

AI Debt vs. Tech Debt: The Two Big Elephants in the Room

AI-driven debt is not just another name for tech debt. While they overlap, they create challenges in very different ways.

When both debts appear together, they reinforce each other in damaging ways:

- Slower Development: Fragile AI on weak code causes repeated delays.

- Vicious Cycle of Errors: AI flaws worsen code issues, breaking pipelines.

- Harder Debugging: Poor visibility in code and AI slows fixes.

- Developer Frustration: More cleanup, less innovation.

How Does AI Debt Lead to Compounded Tech Debt?

Traditional tech debt is measurable.

It’s often measured as the cost of fixing maintainability issues versus the total cost of development.
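
This measure is commonly called the technical debt ratio. A minimal sketch, assuming both costs are expressed in the same unit (hours here); the function name and the sample figures are illustrative only:

```python
def technical_debt_ratio(remediation_cost: float, development_cost: float) -> float:
    """Return remediation cost as a percentage of total development cost."""
    if development_cost <= 0:
        raise ValueError("development_cost must be positive")
    return remediation_cost * 100 / development_cost


# Hypothetical figures: 120 hours of known fixes against 2,400 hours of development.
ratio = technical_debt_ratio(remediation_cost=120, development_cost=2400)
print(f"Technical debt ratio: {ratio:.1f}%")
```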

But when AI-driven debt co-exists with tech debt, those formulas fall short.

Here’s how AI debt can snowball into compounding tech debt and make your SDLC highly inefficient:

1. Brittle AI Test Automation

- AI Debt: AI tests miss edge cases and adapt poorly to code changes.

- Tech Debt Compounding: More bugs slip in, QA cycles stretch longer.

2. AI-Created Deployment Risks

- AI Debt: AI-driven changes often skip guardrails.

- Tech Debt Compounding: Releases need frequent hotfixes.

3. Black-Box Builds

- AI Debt: AI tools hide logic and dependencies.

- Tech Debt Compounding: Pipelines break often, patches stack up.

The takeaway is clear: AI debt doesn’t just coexist with tech debt; it multiplies it. Worse, it pulls engineering teams into firefighting mode.

Highly Probable: You Add AI to Reduce Tech Debt, and End Up Creating AI Debt

AI is often introduced with the promise of reducing effort, speeding up reviews, and preventing errors. Yet, without discipline, it quickly becomes another source of debt.

- AI Hype FOMO: Tools adopted for trend, not fit.

- Misaligned Models: Models are outdated or not fine-tuned.

- Performance Bottlenecks: Integrations slow down builds instead of speeding them up.

- Lack of Scalability: AI setup crumbles as workloads grow.

- Poor Change Management: AI not retrained as codebase evolves.

- Automating Symptoms, Not Causes: AI patches problems without fixing roots.

Why AI Fatigue is a Key Early Warning Sign of Accumulating AI Debt

AI fatigue happens when developers lose trust in AI systems that are supposed to help them but instead add complexity and frustration.

Instead of reducing effort, poorly governed AI tools generate unreliable code, confusing test results, or unexplainable changes.

Once trust is gone, leadership struggles to scale AI use across teams. Delivery slows, innovation stalls, and business objectives get stuck.

A hidden culprit here is workflow bloat: AI adds more steps, manual checks, or redundant processes instead of simplifying them.

Early Warning Signs of AI Debt:

- Frequent build or test failures due to AI-generated errors

- Slow execution times in pipelines where AI adds overhead

- Over-reliance on AI testing with poor coverage

- Manual interventions required to “fix” AI-driven steps

- Growing complexity from layered AI agents and tools

- Outdated or misaligned AI models still in active use

- Difficulty scaling AI use as systems or teams grow

- Rising tech debt in the codebase from AI shortcuts

- Low developer confidence in AI suggestions (AI fatigue)
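
Several of these signs can be tracked from data a CI system already produces. A minimal sketch, assuming each pipeline run is tagged with whether an AI-driven step failed and whether someone had to intervene manually. The `PipelineRun` record, its field names, and the thresholds are hypothetical and would need tuning for a real team.

```python
from dataclasses import dataclass


@dataclass
class PipelineRun:
    ai_step_failed: bool       # an AI-driven step caused the failure
    manual_fix_needed: bool    # a person had to patch or rerun an AI-driven step
    duration_minutes: float


def warning_signs(runs: list[PipelineRun], baseline_minutes: float) -> list[str]:
    """Return the early warning signs that the given runs exhibit."""
    if not runs:
        return []
    total = len(runs)
    signs = []
    # Assumed threshold: more than 10% of runs failing on an AI-driven step.
    if sum(r.ai_step_failed for r in runs) / total > 0.10:
        signs.append("frequent AI-caused failures")
    # Assumed threshold: more than 20% of runs needing a manual fix.
    if sum(r.manual_fix_needed for r in runs) / total > 0.20:
        signs.append("frequent manual interventions")
    # Assumed threshold: average duration more than 25% above the baseline.
    avg_duration = sum(r.duration_minutes for r in runs) / total
    if avg_duration > 1.25 * baseline_minutes:
        signs.append("pipeline slowdown")
    return signs


runs = [
    PipelineRun(True, True, 22.0),
    PipelineRun(False, False, 18.0),
    PipelineRun(False, True, 21.0),
    PipelineRun(False, False, 19.0),
]
print(warning_signs(runs, baseline_minutes=15.0))
```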

The Role of Code Governance and Context-Aware AI Code Reviews in Reducing AI Tech Debt

If AI is joining your engineering team, governance is the backbone for safe adoption. Without strong rules and review habits, AI-driven changes sneak into production, and debt piles up quietly.

Why Code Governance Matters:

- Clear Accountability: Every change, whether human- or AI-generated, needs traceability: who authored it, who reviewed it, and under what conditions it was approved.

- Guardrails for Autonomy: Governance frameworks ensure that AI systems never operate without human oversight where risk is high.

- Policy Enforcement: Rules for testing, documentation, and approval processes keep both traditional tech debt and AI debt under control.
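
These three rules can be expressed as a merge gate. The sketch below is illustrative only: the `Change` record, its fields, and the specific policy (AI-generated changes need a human reviewer, high-risk changes need two, and everything needs passing tests) are assumptions for the example, not a prescribed standard.

```python
from dataclasses import dataclass, field


@dataclass
class Change:
    author: str
    ai_generated: bool
    high_risk: bool
    tests_passed: bool
    human_reviewers: list[str] = field(default_factory=list)


def policy_violations(change: Change) -> list[str]:
    """Return the reasons a change may not merge; an empty list means it may."""
    violations = []
    # Exclude the author so nobody approves their own change.
    reviewers = [r for r in change.human_reviewers if r != change.author]
    if not change.tests_passed:
        violations.append("tests must pass before merge")
    if change.ai_generated and not reviewers:
        violations.append("AI-generated change needs a human reviewer")
    if change.high_risk and len(reviewers) < 2:
        violations.append("high-risk change needs two human reviewers")
    return violations


change = Change(author="dev-a", ai_generated=True, high_risk=True,
                tests_passed=True, human_reviewers=["dev-b"])
print(policy_violations(change))
```

Returning the list of reasons, rather than a bare pass or fail, keeps the decision traceable for whoever reads the record later.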

AI-Enabled, Context-Aware Code Reviews:

AI-generated code introduces risks of a different kind, around logical correctness, domain-specific compliance, and long-term maintainability. Context-aware review tools step in here:

- Deeper Validation: They check if AI’s changes match business logic and fit into the architecture.

- Explainability: They show why a code block is risky or inconsistent, so devs trust the feedback.

- Policy Integration: They enforce coding, security, and compliance checks by default.

- Continuous Learning: They learn from past merges, rollbacks, and incidents, adapting to your codebase over time.

The Combined Impact:

- Prevent AI from introducing hidden flaws into production.

- Catch risks earlier in the SDLC, before they turn into expensive fixes.

- Reduce cognitive load on developers, cutting down on AI fatigue.

- Build trust in AI systems by making their decisions transparent and verifiable.

The Final Answer to AI Tech Debt

AI brings speed. But if left unchecked, it also brings hidden AI debt. The real challenge isn’t just spotting where that debt forms, but making sure it doesn’t grow in the first place.

That’s where Hivel steps in.

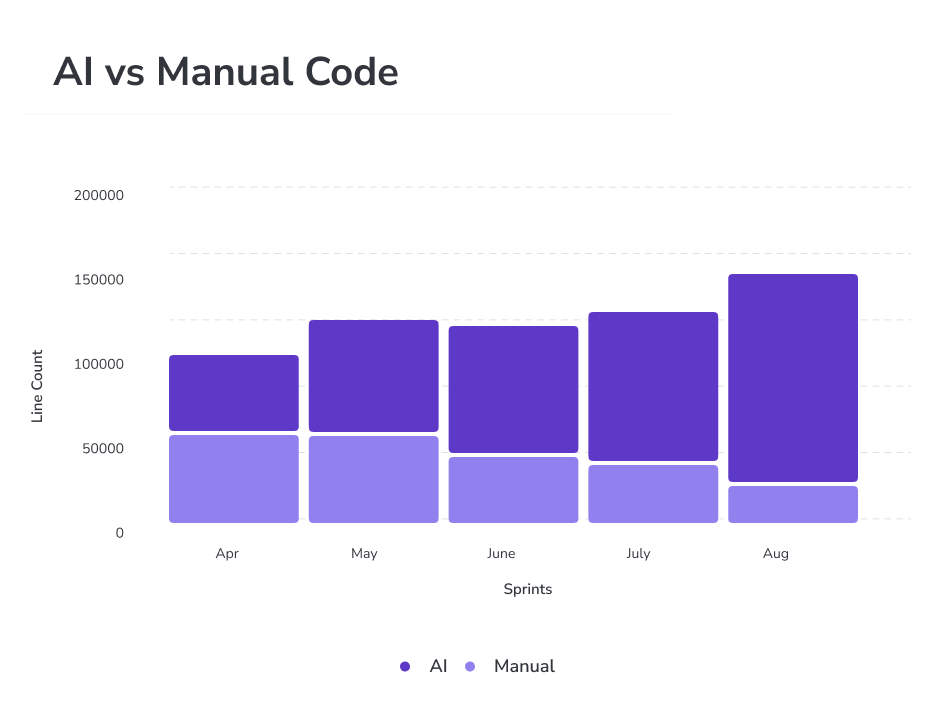

- See what AI is really doing. Hivel breaks down AI vs. manual code so you know exactly how much your teams depend on AI, and where it helps or hurts.

- Measure adoption with proof. With Copilot and Cursor analytics, you can see usage trends, acceptance rates, and which teams are getting real ROI from AI.

- Spot the patterns. High, medium, and low AI usage cohorts highlight your trailblazers and laggards. Leaders can double down on what works and coach where needed.

- Tie it back to outcomes. From DORA metrics to defect density, Hivel connects AI usage directly to delivery speed, quality, and risk.

- On top of that, Marco, Hivel’s AI Code Review Agent, closes the loop. It joins every PR, adds context-aware feedback, enforces standards, and ensures AI-written code doesn’t slip into debt or security gaps.

The result is simple:

- You adopt AI with clarity, not guesswork.

- You prevent AI-created debt before it compounds.

- You scale faster while keeping quality and trust intact.

Book a Demo to Detect Invisible AI Tech Debt and Get Rid of It
