Why a High Rework Rate Is a Hidden Risk in the AI-Driven SDLC

The spinning wheels problem in modern software engineering is becoming more real.

You’re pedaling hard on a stationary bike that keeps speeding up. Your legs burn and you sweat more, but you aren’t really moving anywhere. Each round takes more effort and you’re constantly in motion, yet the scenery never changes.

In other words, you keep shipping, keep fixing, and keep re-shipping. The sprint board, of course, stays green, but the roadmap? Well, it barely moves. Everyone seems busy, but progress feels stuck somewhere between two giant rocks.

That’s exactly what rework feels like. It’s the difference between spinning the wheel of a stationary bike and riding a real one that actually takes you forward. It is a silent productivity killer, even for the best-equipped and most experienced engineering teams on the planet.

The problem with rework is that it does not break or crash anything, so you can’t identify it easily. It just quietly burns time, focus, and morale.

And just when we thought engineering analytics dashboards would finally rescue us from it, a new layer appeared.

The rise of AI in the SDLC promised a new era of speed and precision. However, it also amplifies whatever is broken in the process. When rework creeps into an AI-driven pipeline, it doesn’t just repeat; it compounds.

According to a research paper published in the International Journal of Software Engineering & Applications (IJSEA), “Software specialists spend about 40 to 50 percent of their time on avoidable rework rather than on what they call value-added work.”

Its most alarming finding: “Rework is a prevailing scourge as it consumes up to 40 to 70% of a project’s budget.”

Given how serious this is, let’s look at it in more detail.

Why Rework Stays Invisible: Its Camouflage Advantage

Rework has an odd trait: it looks like progress, and that gives it perfect camouflage. In the AI-SDLC, that camouflage is stronger than ever.

The system records every reopened ticket, every small fix, and every post-release patch as more work done.

To make it worse, most engineering teams don’t track rework directly; more precisely, they lack the visibility to separate new value from recycled effort. They track speed: story points burned, commits pushed, PRs merged. The problem with velocity is that it measures only how far you moved, not how many times you went around the same circle.

It’s like a manager reading a factory report that says “100 cars built,” without knowing that 30 of them had to go back on the assembly line.

The deeper problem is cultural. We normalize rework by saying “It’s just another bug” or “We’ll fix it in the next sprint.”

Rework is even more likely to stay hidden in the AI-SDLC, for a few reasons:

- Faster cycles mask repetition - AI speeds up coding, so repeated fixes can pile up before anyone notices a pattern.

- AI-generated code hides intent - When an AI suggestion doesn’t match what the developer or the existing codebase intended, the mismatch creates hidden correction loops.

- Traditional metrics mislead - Story points, commits, and PRs look healthy even when much of the effort is rework caused by AI-assisted coding.

Here is how rework typically plays out in the AI-SDLC:

- AI Suggests Code: Developer A uses an AI tool to auto-generate a new feature.

- Integration Misalignment: The snippet works, but conflicts with existing modules.

- Hidden Fixes: Developer B applies small fixes; automated tests flag an edge case.

- Multiple Iterations: A few more tweaks happen before the PR finally merges.

- Dashboards Don’t Reveal It: Story points and commits still show a “completed feature.”

Managers miss it for a simple reason: engineering work is still a black box. Traditional engineering analytics tools weren’t built to tell the AI story. They show you volume, not intent.

In short, your culture, vanity metrics, and the black-box nature of engineering work together give rework its perfect camouflage advantage.

What Rework Really Costs You (something you can’t fix right away!)

Imagine climbing the same mountain twice, the second time only because you left your wedding ring at the summit on your first attempt. You would never call that an adventure. It costs you extra time, money, and energy, and, most importantly, possibly the wedding!

Back to software: rework isn’t just about fixing bugs or the systemic issues behind bug escapes and unpredictable releases. It’s a toll you pay for rushed work, unclear ownership, and missing context.

And it harms you in an ironic way: it shows up later, when you are already buried under extended cycles, missed releases, and tired engineers.

Damaged morale is the first hefty cost you pay. Let’s simply accept it: engineers hate going back to code they thought they’d closed.

And when your engineers are unhappy, your clients can sense it, and they start to lose trust.

So you rally your confidence, your judgment, and your team, and you stick to your plan. You are ready to fix it, whatever it takes in money and time.

The irony is that even when you spend more time and money on the fix, fixes layered on fixes add complexity, which makes future changes riskier and slower (the mighty tech debt that even the greatest CTOs fear!).

Simply put, the cost of rework can’t be measured in time and money alone. Much of it can’t be captured in numbers at all!

Recognizing the Warning Signs (Metrics) of High Rework Rate

Rework is quiet, but it leaves fingerprints.

Traditional Metrics:

1) Reopen Rate: Tickets or PRs reopened, sometimes multiple times, within a sprint or after it closes.

2) Bug Escape Rate: Defects slipping past QA into production.

3) Defect Injection Rate: Number of new bugs introduced per release or module.

4) Defect Density: Defects per thousand lines of code. A rising density highlights risky areas and repeated fixes. (“If the metric consistently exceeds 2.0 defects per KLOC (thousand lines of code), it often suggests the software requires immediate attention and improvement before release.”)

5) Burndown Chart Irregularities: Flat or jagged lines mid-sprint can be a sign of repeated work.

6) Code Review Churn: PRs cycling multiple times before merge.

7) Persistent tech debt: Refactors and cleanups linger on the backlog sprint after sprint.

8) Frustrated engineers: Visible in your engineers’ declining focus, creativity, and flow as WIP piles up.
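To make the first few signals concrete, here is a minimal Python sketch that computes reopen rate, bug escape rate, and defect density from per-release counts. The `ReleaseStats` fields and all function names are hypothetical, and it assumes you can already pull these counts from your tracker. It also reads “consistently exceeds” as “every release in the window exceeds 2.0 defects per KLOC,” which is one reasonable interpretation rather than a standard definition.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Counts for one release. Field names are hypothetical, not tied to any tool."""
    tickets_closed: int
    tickets_reopened: int          # closed tickets reopened at least once
    defects_before_release: int    # caught by QA, review, or CI
    defects_in_production: int     # escaped to users
    lines_of_code: int


def reopen_rate(s: ReleaseStats) -> float:
    """Share of closed tickets that were reopened."""
    return s.tickets_reopened / s.tickets_closed if s.tickets_closed else 0.0


def bug_escape_rate(s: ReleaseStats) -> float:
    """Share of all known defects that were first found in production."""
    total = s.defects_before_release + s.defects_in_production
    return s.defects_in_production / total if total else 0.0


def defect_density_per_kloc(s: ReleaseStats) -> float:
    """All known defects per thousand lines of code."""
    total = s.defects_before_release + s.defects_in_production
    return total / (s.lines_of_code / 1000) if s.lines_of_code else 0.0


DENSITY_THRESHOLD = 2.0  # rule-of-thumb value quoted in the text


def consistently_high_density(history: list[ReleaseStats]) -> bool:
    """True only if every release in `history` exceeds the threshold."""
    return bool(history) and all(
        defect_density_per_kloc(s) > DENSITY_THRESHOLD for s in history
    )


if __name__ == "__main__":
    release = ReleaseStats(80, 12, 30, 10, 16_000)
    print(f"Reopen rate:     {reopen_rate(release):.0%}")
    print(f"Bug escape rate: {bug_escape_rate(release):.0%}")
    print(f"Defect density:  {defect_density_per_kloc(release):.1f} per KLOC")
```

Requiring every release in the window to cross the threshold keeps a single bad release from triggering an alarm; a rolling average would be an equally valid choice.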

AI-Specific Metrics:

AI metrics themselves don’t directly measure rework, but they can be indicators or proxies that reveal patterns.

1) AI Suggestion Acceptance Rate: Percentage of AI-generated code or fixes accepted without modification. Low acceptance may indicate misalignment or hidden rework.

2) AI Iteration Count per Task: Multiple AI-assisted attempts before a merge can signal repeated fixes, which can be a form of rework amplified by AI.

3) AI-Generated Bug Rate: Bugs traced back to AI-assisted changes. They show that some AI-generated work needs correction, which is another sign of rework.

4) AI Contribution vs Manual Workload: If some developers rely heavily on AI-generated code while others do mostly manual work, it can create hidden rework hotspots.
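The first two AI-specific signals can be approximated from a log of AI suggestions. This sketch assumes a hypothetical `AISuggestion` record per suggestion (task ID, whether it ended up in the merged change, whether the developer edited it first); real AI coding tools expose this kind of data differently, if at all. The iteration limit of 3 is an arbitrary example, not a benchmark.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass
class AISuggestion:
    """One AI suggestion attached to a task. Hypothetical schema."""
    task_id: str
    accepted: bool   # suggestion ended up in the merged change
    modified: bool   # developer edited it before accepting


def acceptance_rate(suggestions: list[AISuggestion]) -> float:
    """Share of suggestions accepted without modification."""
    if not suggestions:
        return 0.0
    clean = sum(1 for s in suggestions if s.accepted and not s.modified)
    return clean / len(suggestions)


def iterations_per_task(suggestions: list[AISuggestion]) -> dict[str, int]:
    """Number of AI-assisted attempts recorded per task."""
    return dict(Counter(s.task_id for s in suggestions))


def high_iteration_tasks(suggestions: list[AISuggestion], limit: int = 3) -> list[str]:
    """Tasks with more than `limit` AI attempts (the limit is an arbitrary example)."""
    counts = iterations_per_task(suggestions)
    return sorted(task for task, n in counts.items() if n > limit)


if __name__ == "__main__":
    log = [
        AISuggestion("T1", accepted=True, modified=False),
        AISuggestion("T2", accepted=True, modified=True),
        AISuggestion("T2", accepted=False, modified=False),
        AISuggestion("T2", accepted=False, modified=False),
        AISuggestion("T2", accepted=True, modified=True),
        AISuggestion("T3", accepted=True, modified=False),
    ]
    print(f"Acceptance rate: {acceptance_rate(log):.0%}")
    print("Tasks with many AI iterations:", high_iteration_tasks(log))
```

Here task `T2` stands out: four AI attempts, none accepted as-is, which is exactly the kind of hidden correction loop described earlier.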

By combining traditional engineering metrics with AI-specific metrics, teams can spot hidden rework even in an AI-driven SDLC.

Hivel’s GenAI Adoption Analytics helps you measure, optimize, and master AI-assisted code development. Learn more about Hivel’s GenAI Adoption Analytics.

How the Best Engineering Teams Reduce Rework

They treat it as a system issue, not a personal failure!

Here are more details.

- Make Work Transparent

They break open the black box. They track every PR, ticket, and bug with meaningful context and metrics, turning hidden rework into visible signals that give managers and leads the context to act early.

- Shift Left with QA

Testing doesn’t just happen at the end. They run code quality checks, automated tests, and peer reviews as early as possible, because catching defects before code is merged dramatically reduces rework.

- Limit Context Switching

They avoid having too many open tasks, which leads to context switching and mistakes. They cap WIP, focus on finishing items, and avoid juggling too many stories at once.

You should also read >> Quantifying the Cost and Finding Solutions of Context Switching

- Measure What Matters

They don’t stop at measuring velocity. They actively monitor metrics like Defect Injection Rate, Bug Escape Rate, Reopen Rate, and MTTR (mean time to recovery). These metrics guide prioritization and expose patterns early.
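Two of these metrics are easy to sketch: MTTR as the average time from detecting an incident to recovering from it, and defect injection rate as a count of new bugs per module in a release. This minimal Python example assumes you already have detection and recovery timestamps for each incident and a module label for each new bug; the function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from datetime import datetime, timedelta


def mean_time_to_recovery(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (recovered_at - detected_at) across incidents."""
    if not incidents:
        return timedelta(0)
    total = sum((recovered - detected for detected, recovered in incidents), timedelta(0))
    return total / len(incidents)


def defect_injection_by_module(bug_modules: list[str]) -> dict[str, int]:
    """New bugs per module for one release, given the module each bug was traced to."""
    return dict(Counter(bug_modules))


if __name__ == "__main__":
    incidents = [
        (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 11, 0)),   # 2 hours
        (datetime(2024, 5, 7, 14, 0), datetime(2024, 5, 7, 18, 0)),  # 4 hours
    ]
    print("MTTR:", mean_time_to_recovery(incidents))
    print(defect_injection_by_module(["billing", "auth", "billing"]))
```

Tracking injection per module rather than per release as a whole makes it easier to see which areas keep generating repeat fixes.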

- PR Hygiene & Best Practices

They practice PR hygiene: small (under 400 LOC), well-scoped PRs with clear descriptions, linked tickets, and automated checks. Clean PRs prevent confusion and minimize review churn.
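A PR hygiene gate like this can be automated in CI. The sketch below assumes a hypothetical `PullRequest` record that you would populate from your Git host, counts lines added plus lines deleted as the PR’s size, and looks for Jira-style ticket keys (such as `PAY-123`) in the title or description. Adjust both assumptions to your own conventions.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

MAX_PR_LOC = 400  # size limit suggested in the text
# Assumes Jira-style issue keys such as "PAY-123"; change to match your tracker.
TICKET_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


@dataclass
class PullRequest:
    """Hypothetical PR summary; populate it from your Git host's API."""
    title: str
    description: str
    lines_added: int
    lines_deleted: int
    checks_passed: bool


def hygiene_issues(pr: PullRequest) -> list[str]:
    """Return human-readable problems; an empty list means the PR looks clean."""
    issues = []
    changed = pr.lines_added + pr.lines_deleted
    if changed >= MAX_PR_LOC:
        issues.append(f"too large: {changed} changed lines (limit {MAX_PR_LOC})")
    if not pr.description.strip():
        issues.append("missing description")
    if not TICKET_KEY.search(f"{pr.title} {pr.description}"):
        issues.append("no linked ticket")
    if not pr.checks_passed:
        issues.append("automated checks not passing")
    return issues


if __name__ == "__main__":
    good = PullRequest("PAY-42 Retry failed webhooks", "Adds backoff.", 120, 30, True)
    bad = PullRequest("Big refactor", "", 350, 100, False)
    print(hygiene_issues(good))
    print(hygiene_issues(bad))
```

Returning a list of reasons instead of a single pass/fail flag gives the author something concrete to fix before a reviewer ever opens the PR.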

- Address Code Review Bottlenecks

Long review queues and overburdened reviewers let bugs slip through the review cycle unnoticed. That is why top teams distribute reviews strategically, rotate ownership, and automate the first review pass with a context-aware AI code review agent.

- Integrate AI-SDLC Workflows Thoughtfully

Don’t treat AI as just a speed tool; treat it as part of your workflow. The best teams track AI-generated changes separately. They identify areas where AI truly adds value, catch early signs of repeated corrections, and adjust processes or training. Ultimately, the goal is to keep human and AI workflows aligned and efficient.

How Hivel Links Quality & Productivity and Turns Your Engineering Black Box into a Clear Picture

Hivel is designed to open the black box, even in modern AI-driven workflows!

You know the feeling. You glance at the dashboard and everything looks fine. Yet somehow you’re stuck, spending too much on something you can’t see, can’t measure, can’t track, and therefore can’t improve.

Hivel changes that.

Unified Dashboard (Cockpit): A single command-center view with context-rich insights drawn from Git, Jira, and your CI/CD systems. The outcome: real-time visibility into cycle time, pull requests, rework, and tech debt.

PR and Review Metrics: See large PRs, review churn, time to merge, and how much rework is hiding in the review cycle, including corrections to AI-assisted code.

Quality + Productivity Link: In a classic scenario, shipping more is quietly costing you hidden rework. But how would you know? Hivel tracks defect density and rework, shows change failure rate, MTTR, and other metrics, and correlates code review and hotfix data to show clearly where quality is breaking down and productivity is leaking.

Drill‑down, Not Just Dashboards: Go from team → repo → specific PRs to uncover the exact blockers and inefficiencies, whether human or AI-driven.

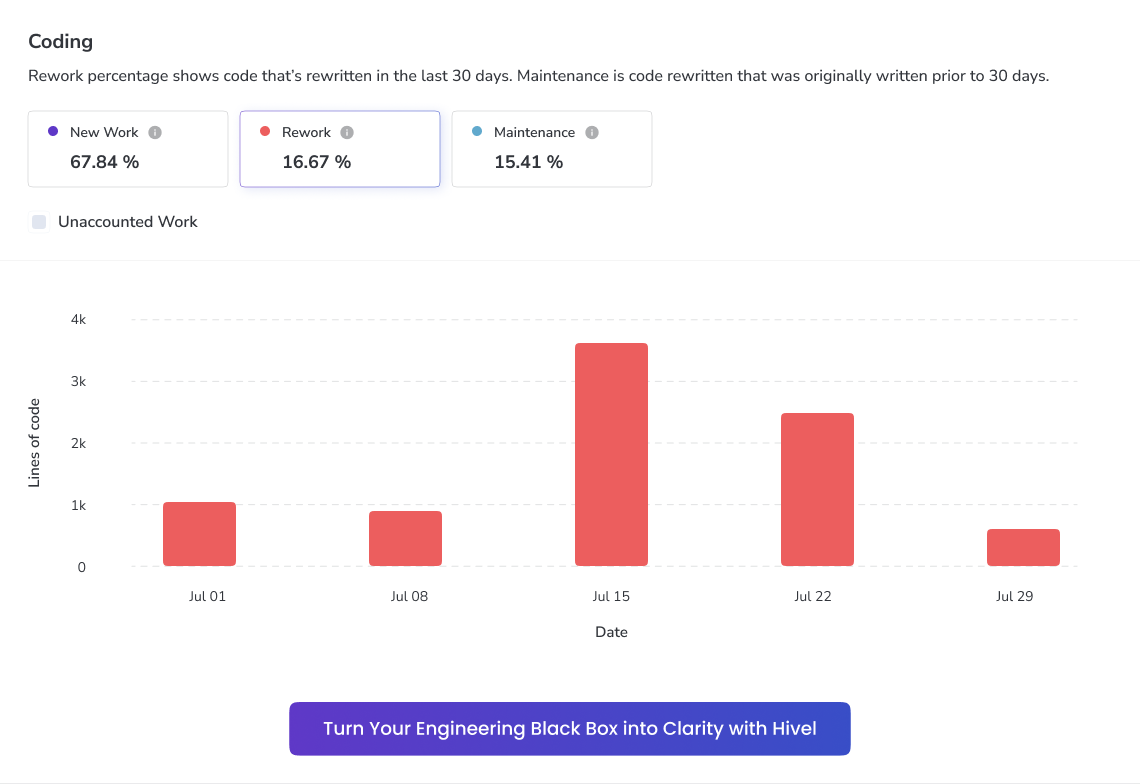

Smart Code Work Classification: Hivel breaks down your code changes into New Work (fresh code), Rework (fixes or changes to code written in the last 30 days), and Maintenance (refactors, dependency bumps, and general housekeeping on code older than 30 days). This clarity helps engineering leaders spot imbalances fast.
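The 30-day rule can be illustrated with a simplified sketch. This is not Hivel’s implementation. It assumes that for each changed line you know when the line it replaces was originally written (for example, from `git blame` on the parent commit), and it classifies by age alone, whereas the Maintenance category above also covers refactors and dependency bumps. `LineChange`, `classify`, and `work_breakdown` are hypothetical names, and counting exactly 30 days as Rework is an arbitrary boundary choice.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

REWORK_WINDOW = timedelta(days=30)
CATEGORIES = ("new_work", "rework", "maintenance")


@dataclass
class LineChange:
    """One changed line; `original_authored` is None when the line is brand new."""
    original_authored: Optional[date]


def classify(change: LineChange, change_date: date) -> str:
    """Label a changed line by the age of the code it replaces."""
    if change.original_authored is None:
        return "new_work"
    age = change_date - change.original_authored
    return "rework" if age <= REWORK_WINDOW else "maintenance"


def work_breakdown(changes: list[LineChange], change_date: date) -> dict[str, float]:
    """Share of changed lines in each category."""
    counts = Counter(classify(c, change_date) for c in changes)
    total = len(changes)
    return {cat: (counts[cat] / total if total else 0.0) for cat in CATEGORIES}


if __name__ == "__main__":
    commit_day = date(2024, 6, 30)
    changes = [
        LineChange(None),               # fresh code
        LineChange(None),               # fresh code
        LineChange(date(2024, 6, 20)),  # 10 days old -> rework
        LineChange(date(2024, 1, 15)),  # months old -> maintenance
    ]
    print(work_breakdown(changes, commit_day))
```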
